It’s not uncommon to hear schools express the view that the attainment of their intake is systematically overestimated or ‘inflated’ in some way. Schools are not accusing one another of cheating; they are simply pointing out that high-stakes accountability pushes teachers to ensure children achieve the best result possible. And where a feeder school outperforms expectations for an entire class, those receiving the pupils in September are given a very difficult hurdle to jump over.

Is there any systematic evidence that certain teachers assess children optimistically on Key Stage tests in England? (There is plenty of evidence that they do in the US.) Here, we look at Key Stage One assessment, which is particularly interesting for two reasons. First, it is teacher-assessed, although this was not always the case, and so there is room for a great deal of discretion as to how Levels are assigned. Second, it takes place at age 7 and is treated as a baseline metric for judging progress in primary schools, and yet is an outcome measure for those in stand-alone infant schools.

Why is Key Stage One assessment so different in infant and primary schools?

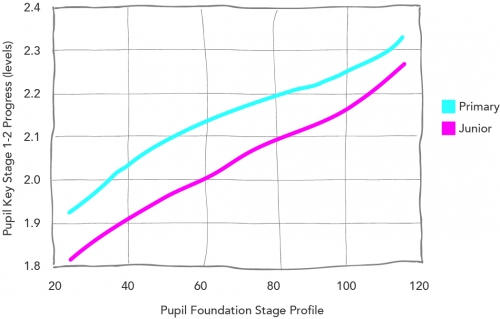

Junior schools regularly claim that the Key Stage One scores assigned by infant schools are unrealistically high, making it hard for them to achieve good Key Stage One-Key Stage Two progress compared to all-through primary schools. Junior schools do make relatively poor progress at Key Stage Two, but does the blame lie with infant schools ‘optimistically’ inflating their pupil grades?

Pupil progress in junior schools is poor

Note: we band the Foundation Stage Profile total point scores into groups of 10
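For readers who want to reproduce the banding, here is a minimal sketch in Python. The function name `band_fsp_score` is hypothetical, and the choice to start each band at a multiple of 10 (0-9, 10-19, and so on) is an assumption; the note above only says that the groups are 10 points wide.

```python
from typing import Tuple


def band_fsp_score(total_points: int, width: int = 10) -> Tuple[int, int]:
    """Return the inclusive (low, high) band containing a total point score.

    Assumption: bands start at multiples of `width`, e.g. 80-89 for width 10.
    """
    if total_points < 0:
        raise ValueError("total point score cannot be negative")
    if width <= 0:
        raise ValueError("band width must be positive")
    low = (total_points // width) * width  # round down to the band's lower edge
    return low, low + width - 1


# A pupil with a total of 87 points falls in the 80-89 band.
example_band = band_fsp_score(87)
```

Banding in this way trades a little precision for larger groups of pupils at each starting point, which makes the average progress within each group less noisy.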

At first glance, junior schools appear to have a point: infant schools look suspiciously effective when judged on their Foundation Stage Profile-Key Stage One progress relative to primary schools. And the split infant-junior systems are no more or less effective than all-through primary schools when progress from age 5 to age 11 is measured.

Infant schools achieve high progress, yet the split infant-junior system is no different overall

It seems highly unlikely that infant schools are systematically effective institutions whilst junior schools are systematically ineffective institutions. So, are infant schools indeed inflating their results, or does the problem lie elsewhere?

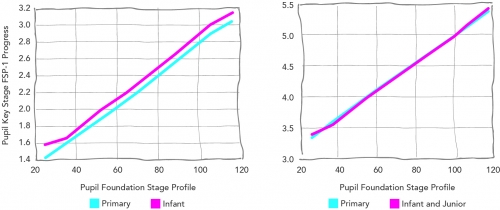

Schools responded differently to the switch from external marking to teacher assessment

Key Stage One assessments were externally marked tests until 2003, after which it was left to schools themselves to measure pupil attainment. Before 2003, infant schools achieved only slightly higher Key Stage One scores than primary schools, but after teacher assessment was introduced, their scores started to diverge strongly.

Infant and primary school Key Stage One scores really diverge after teacher assessment begins

The pattern of this divergence is very clear. There is little evidence that infant schools are taking advantage of teacher assessment to inflate the scores they give pupils. Instead, teacher assessment in primary schools produces lower judgements of Key Stage One attainment, which lowers the bar those schools must clear to show impressive pupil value-added at Key Stage Two.

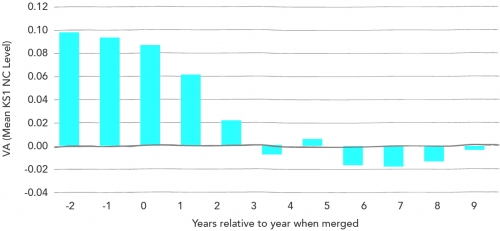

Incentives change if infant schools are reorganised into primary schools

We can also use the large number of reorganisations from split infant-junior schools into all-through primary schools to see how Key Stage One assessments change over time. We see exactly the same pattern: the apparent effectiveness of infant schools, as measured by their Foundation Stage Profile-Key Stage One value-added, slips away once they become part of a primary school.
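To make the idea of value-added concrete, the sketch below shows one simple way such a measure could be computed. This is an assumption for illustration, not necessarily the method behind the charts: each pupil's Key Stage One score is compared with the average score of all pupils in the same Foundation Stage Profile band, and those differences are averaged by school type. The function name, the record layout and the numbers in the example are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def value_added_by_school_type(pupils):
    """Average (actual - expected) Key Stage One score for each school type.

    `pupils` is an iterable of (school_type, fsp_band, ks1_score) records.
    Assumption: the expected score is the mean KS1 score of every pupil
    in the same FSP band, whatever type of school they attend.
    """
    pupils = list(pupils)

    # Step 1: expected KS1 score for each FSP band.
    scores_by_band = defaultdict(list)
    for _, band, ks1 in pupils:
        scores_by_band[band].append(ks1)
    expected = {band: mean(scores) for band, scores in scores_by_band.items()}

    # Step 2: each pupil's difference from expectation, grouped by school type.
    residuals = defaultdict(list)
    for school_type, band, ks1 in pupils:
        residuals[school_type].append(ks1 - expected[band])

    return {school_type: mean(r) for school_type, r in residuals.items()}


# Made-up records for illustration only; these are not real pupil data.
toy_pupils = [
    ("infant", 80, 17),
    ("primary", 80, 15),
    ("infant", 90, 19),
    ("primary", 90, 17),
]
toy_value_added = value_added_by_school_type(toy_pupils)
```

A positive figure means pupils in that type of school were assessed above the average for children with the same starting point; a negative figure means they were assessed below it. On its own, a measure like this cannot say whether the gap reflects real learning or differences in how teachers assess.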

High value-added of infant schools fades away when reorganised into primary schools

Looking across the individual Key Stage sub-levels, it is clear that this pattern is most pronounced for the assessment of high-attaining 7-year-olds. Once teachers are asked to assess pupils themselves, they become particularly cautious in assigning a Level 3. Primary school headteachers have often complained that the conversion of Levels into marks by the Department for Education encourages this, since all Level 3 children are judged equal; at Key Stage One there is no such thing as a sub-Level 3C. Furthermore, the conceptualisation of what it means to be ‘working at Level 3’ may be different in a primary school where teachers work across Key Stages One and Two.

So, junior schools are, in part, vindicated by our analysis. It is indeed more difficult for them to achieve high Key Stage One-Key Stage Two progress, having received their Key Stage One assessments from infant schools. But perhaps they are wrong to point the finger of blame at their feeder school partners. Instead, the problem appears to lie with primary schools that depress their teacher-assessed Key Stage One baselines to achieve the best possible progress results at Key Stage Two. This is not cheating of the sort we see in the US. Teacher assessment of young children is highly subjective, and when primary schools face enormous incentives not to give a child the benefit of the doubt for an answer to a question, it is not surprising that they choose to err on the side of caution.

One of the quirks of the National Curriculum for England is that while levels of attainment were always intended to be independent of the age of the student, the programmes of study were age-specific. The result is a strange system in which Level 3 (say) is meant to denote the same level of achievement whether it is based on the programmes of study for Key Stage One or that for Key Stage Two. The question, therefore, is what the ‘correct’ interpretation of Level 3 should be. Junior schools have claimed that the assessments made by infant schools are somehow inflated, but the analysis above presents a strong argument that these claims have little merit. Indeed, if there is any distortion in the system, it is that levels given to students at the end of Key Stage One in primary schools are lower than they should be, so that it is the estimates of value-added in Key Stage Two that are inflated.

Dylan Wiliam, Emeritus Professor of Educational Assessment, UCL Institute of Education

This analysis shows how difficult it is for someone making a judgement not to be influenced by their knowledge of the use to which the judgement will be put. As assessment data is put to more uses, it becomes even more important to make sure that the context is recognised when the data is interpreted. Understanding context can also help to make sure that we have realistic expectations of assessment programmes.

Amanda Spielman, Chair of Ofqual and Education Advisor to ARK

I have been around long enough to remember the introduction of the National Curriculum and Key Stage testing in the early 90s, and the words of one trainer have stayed with me. She put some water into a glass and then put a pencil into it to measure the level – Key Stage One. She topped it up with more water and measured it again – Key Stage Two. Then she repeated the exercise – Key Stage Three. And her comment was, “But, as we all know, children leak…”. Knowing this, it is understandable that teachers in primary schools feel cautious about assessing high levels of attainment at Key Stage One that then translate into higher targets for all of their teaching colleagues.

Jill Berry, Educational consultant and former headteacher
