This is the third post in a series based on data collected by FFT on the GCSE grades which secondary schools in England proposed for their pupils. For more details on the data collected and the overall findings, read the first post in this series.

The first post in this series looked at changing grade distributions when actual 2019 GCSE results are compared to provisional centre assessment grades for 2020. It found that these proposed grades were, on average, higher than those awarded in exams last year – though, as we stated there, these may not be the final grades which schools will have submitted to the exam boards.

We might wonder what the aggregate effect on schools’ results would be if the proposed grades we looked at were awarded this summer.

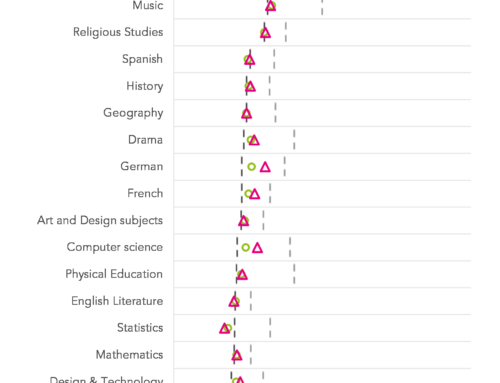

The following chart shows, on a subject-by-subject basis, how much each school’s results would change between 2019 and 2020 if that were the case.

As in the first post, it’s restricted to schools with more than 25 entries in a subject, and to subjects with 100 such schools entering them.

This chart follows the same format as Ofqual’s annual centre variability charts. These tend to show a fairly symmetrical distribution around zero, with similar numbers of schools recording increases in a given subject as numbers recording decreases. Ours, by contrast, are skewed to the right to a greater or lesser extent, with more extreme values (increases of 75 percentage points in attainment at a given threshold, in some cases).

Number of subjects that increase

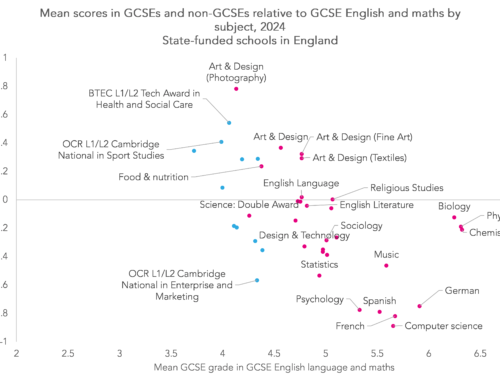

It’s also interesting to consider the number of subjects which would increase or decrease in each school were these preliminary grades actually awarded.

In the charts that follow, we’ve defined an increase in results as a rise of five percentage points or more in the share of pupils achieving grade 4 or above in a given subject from one year to the next, and a decrease as a drop of five percentage points or more. (Results that change by less than five percentage points are considered to be stable.)
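The definition above can be sketched in a few lines of Python. This is only an illustration, not the code used for the analysis: the function name `classify_change` and its parameters are hypothetical, and it assumes that a change of exactly five percentage points counts as an increase or a decrease rather than as stable.

```python
def classify_change(pct_prev, pct_curr, threshold=5.0):
    """Classify the year-on-year change in the percentage of pupils
    achieving grade 4 or above in one subject at one school.

    Assumption: a change of exactly `threshold` points is treated as an
    increase or decrease, not as stable.
    """
    change = pct_curr - pct_prev
    if change >= threshold:
        return "increase"
    if change <= -threshold:
        return "decrease"
    return "stable"


# Made-up figures, for illustration only.
print(classify_change(62.0, 70.0))  # increase (up 8 points)
print(classify_change(62.0, 58.5))  # stable (down 3.5 points)
print(classify_change(62.0, 55.0))  # decrease (down 7 points)
```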

We’ve compared schools’ actual 2019 results to their 2018 results, and the proposed grades we have from schools for 2020 to their actual 2019 results – restricting the analysis to GCSE subjects that were graded 9-1 in 2018 to make comparison possible. Only schools that had more than 25 entries in eight or more different subjects are included.[1]
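As a rough sketch of how the per-school counts behind these charts could be built, the snippet below filters to qualifying subjects and schools and then counts the subjects in which results went up. The record layout, the function names and the use of a single entries figure per school and subject are all assumptions made for illustration; the actual analysis may have handled entries in each year differently.

```python
from collections import Counter

MIN_ENTRIES = 25   # a subject qualifies only with more than 25 entries
MIN_SUBJECTS = 8   # a school needs eight or more qualifying subjects
THRESHOLD = 5.0    # percentage points, at grade 4 or above


def subjects_increased_per_school(records):
    """Count, for each qualifying school, the subjects whose results rose.

    `records` is an iterable of hypothetical tuples:
    (school, subject, entries, pct_prev, pct_curr).
    """
    changes_by_school = {}
    for school, _subject, entries, pct_prev, pct_curr in records:
        if entries > MIN_ENTRIES:
            changes_by_school.setdefault(school, []).append(pct_curr - pct_prev)
    return {
        school: sum(1 for change in changes if change >= THRESHOLD)
        for school, changes in changes_by_school.items()
        if len(changes) >= MIN_SUBJECTS
    }


def modal_count(counts_by_school):
    """Most common number of subjects with an increase across schools."""
    return Counter(counts_by_school.values()).most_common(1)[0][0]


# Made-up data: school "A" has eight qualifying subjects, three of which rise
# by six points; school "B" has only seven, so it is excluded.
records = [("A", f"subject{i}", 30, 60.0, 66.0 if i < 3 else 60.0) for i in range(8)]
records += [("B", f"subject{i}", 30, 60.0, 70.0) for i in range(7)]
counts = subjects_increased_per_school(records)
```

The same function could be run with a mirrored condition (`change <= -THRESHOLD`) to count decreases.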

As can be seen in the charts on the left, the number of subjects in which schools saw results increase between 2018 and 2019 more or less mirrored the number in which they saw results decrease, as we’d expect.

But, comparing 2019 results and proposed 2020 grades, a number of things are clear. The most common, or modal, number of subjects in which schools would register an increase would go up from five to seven, using these proposed 2020 grades. Results would increase in every subject for 69 schools, out of around 1,500 that feature in this analysis.

Most strikingly, the number of subjects in which schools’ results would be considered to have fallen would drop greatly. Were these 2020 grades awarded this August, two-thirds of schools wouldn’t see a material decrease in their results in a single subject.

Conclusions

As we wrote in the first post in this series, the task that has faced schools and teachers has been enormous, with little guidance available on how to allocate grades to pupils.

But if these grades are anything like those submitted to the exam boards, then Ofqual will have a tricky task bringing this year’s results in line with previous years’. Without any objective evidence on the reliability of grading at each school, the most difficult part will be finding a way of doing this fairly for pupils in schools which submitted lower results, when some other schools will have submitted somewhat higher results.


1. Around 1,500 schools feature in the comparison of 2019 and 2020 – so a slightly smaller group of schools than we’ve looked at in the rest of our analysis. Around 2,400 feature in the comparison of published 2018 and 2019 figures.

Given your conclusions about allowing for lower and higher school assessed grades and reports that A Levels had to be adjusted down by c40% to hit a year on year improvement:

Is my assumption right that some schools will have submitted results in line with 2018 and 2019 and will therefore, in theory, have a result that is “correct” and comparable to past years, whether it’s CAG based or algorithm based (assuming the algorithm worked)? And that other schools will have been overly ambitious and will therefore have a CAG based result in excess of 2018 and 2019 (so the algorithm will be meaningless), making it incomparable and also advantageous relative to the first group of schools?

I write not knowing where my daughter or her school sit on the lower / higher grade position and am genuinely worried about the situation.