This is the second part of a series examining the effect of non-response on PISA 2018 results for the United Kingdom. Part 1 can be found here and the final part can be found here.

In my new paper, released here today and forthcoming in the Review of Education, I report what I consider to be a worrying lack of transparency surrounding some aspects of the reporting of the PISA 2018 data for the UK.

In this blogpost, I focus on the non-response bias analyses that were conducted in England and Northern Ireland but never reported.

Non-response bias analysis

PISA requires 85% of sampled schools to agree to take part in the study. If a country falls below this level, a “non-response bias analysis” must be conducted. The fear is that certain types of schools (e.g. those with lower-achieving pupils) may be less likely to participate, leading to biased results.

In PISA 2018, both England (72%) and Northern Ireland (66%) failed to meet the OECD’s school response rate criteria [1].
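To make the threshold rule concrete, here is a minimal Python sketch. It assumes a simplified version of the rule: it only compares the before-replacement school response rates quoted above against the 85% threshold, and ignores any provisions relating to replacement schools. The names `PISA_SCHOOL_RESPONSE_THRESHOLD` and `needs_nrba` are hypothetical, chosen for illustration.

```python
# Simplified sketch (assumption): flags a non-response bias analysis (NRBA)
# whenever the before-replacement school response rate is below 85%.
# Rules relating to replacement schools are deliberately ignored here.
PISA_SCHOOL_RESPONSE_THRESHOLD = 0.85  # hypothetical name for the 85% rule

# Before-replacement school response rates for PISA 2018, as quoted above.
response_rates = {"England": 0.72, "Northern Ireland": 0.66}


def needs_nrba(rate: float, threshold: float = PISA_SCHOOL_RESPONSE_THRESHOLD) -> bool:
    """Return True if the response rate falls below the threshold."""
    return rate < threshold


for country, rate in response_rates.items():
    print(f"{country}: {rate:.0%} -> NRBA required: {needs_nrba(rate)}")
```

Run as written, it reports that both England and Northern Ireland fall below the threshold, which is why a non-response bias analysis was required in each case.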

But rather than publish these bias analyses in full, the PISA reports published by the OECD and by England and Northern Ireland simply stated their interpretation of the results.

For instance, in the case of England, it was stated that the:

“analysis investigated differences between responding and non-responding schools and between originally sampled schools and replacement schools. This supported the case that no notable bias would result from non-response.”

For Northern Ireland, all that was said was that:

“The results of both NRBAs [non-response bias analyses] were positive meaning that the samples for UK and NI were representative and not biased.”

What on earth does this mean!? How was this analysis conducted and by whom? What does “positive results” mean? Who exactly reviewed this document? How was this judgement reached?

I have ended up having to use freedom of information legislation to find out exactly what was going on.

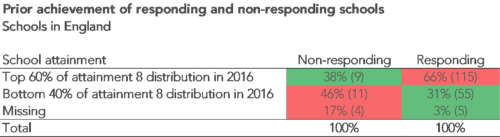

One of the key findings is summarised in the table below, which compares the historical GCSE achievement of responding and non-responding schools in England. As this table clearly illustrates, schools with historically lower levels of achievement tended to be less likely to respond.

Personally, I don’t view this as being “positive” or as indicating that the sample is “not biased” in any way.

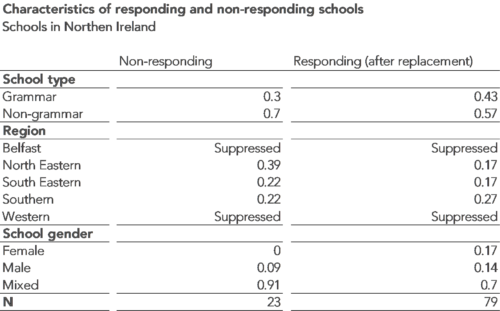

The next table presents the equivalent investigations conducted in Northern Ireland (also obtained via a freedom of information request). As this illustrates, in Northern Ireland, non-grammar schools (which tend to have lower-achieving intakes) were also less likely to respond.

Beyond this, however, the “bias analysis” conducted seems extremely limited. Only a handful of school characteristics are considered, most of which are not strongly associated with pupils’ academic achievement. In other words, the analyses undertaken, particularly in the case of Northern Ireland, do not really tell us whether the high levels of school non-response may have allowed bias to creep into the sample.

Conclusions

I disagree with how the OECD, the Department for Education and the Northern Irish government have interpreted the results of the bias analyses conducted.

At best, the evidence is inconclusive. At worst, it points towards schools with lower-achieving pupils being less likely to take part.

Such evidence is always open to interpretation, of course. And I appreciate others may take a different view.

What is inexcusable, however, is that such information was not openly and transparently published by the OECD – and as part of England’s and Northern Ireland’s national reports – in the first place.

In my view, the UK’s Office for Statistics Regulation should conduct an independent investigation into why this important information was allowed to be hidden away.

Now read the final part of the series.

[1] Before-replacement response rates reported.


It’s not the first time that non-response bias has cast doubt on the reliability of OECD data. The first international Adult Skills Survey, hailed by the media as proof that England’s education system had ‘failed’, was beset with non-response problems. The OECD requested Non-Response Bias Analyses (NRBAs) but England and Northern Ireland failed to do all of them. I wrote to OECD asking if the data for England/Northern Ireland should have been withheld. I also asked if it was likely that the data for England/Northern Ireland would be redacted. The OECD replied saying they judged the data to be of sufficient quality to be published despite the NRBAs not being fully completed. I disagree. I wrote about it here: https://www.localschoolsnetwork.org.uk/2013/10/oecd-stands-by-adult-skills-survey-result-for-englandnorthern-ireland-but-still-advises-caution-in-the-use-of-the-data