Earlier this month I published a blogpost which showed some results of work I’ve been doing on an Economic and Social Research Council-funded project into pupils’ pathways through the education system.

The blogpost looked at outcomes in later life for pupils who took GNVQs in the early part of the century compared to similar pupils in schools which did not offer GNVQs.

I’m going to perform a similar exercise in this blogpost and look at another type of qualification: Level 2 applied science.

A very brief history of science qualifications

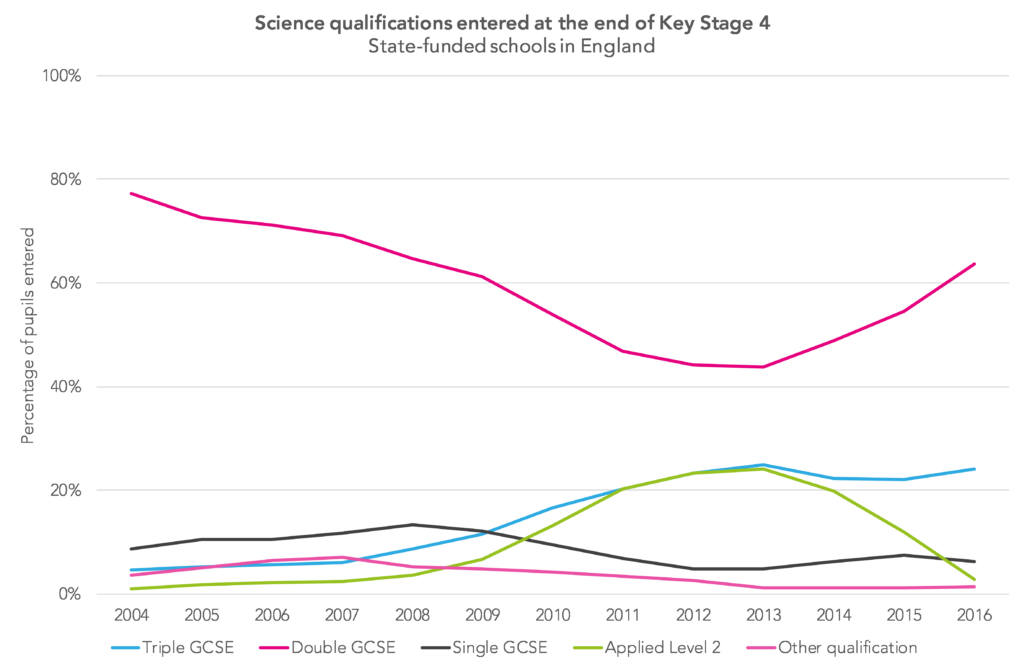

First of all, let’s look at the types of science qualifications entered by pupils over the period from 2004 to 2016, shown in the accompanying chart.

In the earliest of the years shown, double GCSE dominated. Its popularity diminished in response to a rise in other options, such as triple GCSE (biology, chemistry, physics) and applied Level 2 qualifications.

The most common of these applied Level 2 qualifications were the BTEC first certificate (equivalent to two GCSEs) and BTEC first diploma (equivalent to four GCSEs), while smaller numbers took OCR Nationals, which came as either an award (equivalent to two GCSEs) or as a certificate (equivalent to four GCSEs).[1]

The popularity of these qualifications began to take off from 2009. By 2013 almost a quarter of pupils entered one.

But schools quickly switched back to GCSEs from 2013 onwards in response to the Wolf review of vocational education and the introduction of Progress 8.

The data

Around 38,000 16-year-olds entered a Level 2 applied science qualification in 2009, roughly 7% of the population in state-funded schools.

Using the same methodology as in the GNVQ blogpost, I match these pupils to similar pupils at schools which did not offer a Level 2 applied science qualification in 2009.[3]

So in our comparison group, there are pupils taking a variety of other (mostly GCSE) science options, including those taking no science qualification.

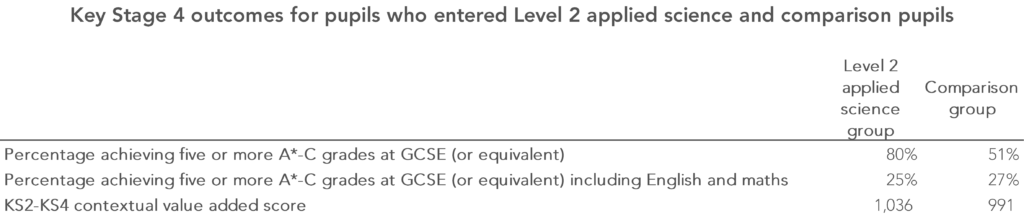

Key Stage 4 outcomes

On the surface, pupils entering Level 2 qualifications in applied science were more likely to have achieved five or more A*-C GCSE grades (or equivalent) than similar pupils in the comparison group. However, this advantage disappears when GCSE English and maths are included in the measure. This is exactly the same finding as in the GNVQ blogpost, which was based on the 2002 cohort of 16-year-olds.

Between 2002 and 2009, the five or more A*-C GCSEs or equivalents including English and maths measure became the dominant performance measure for secondary schools. We had the first floor standard – that at least 30% of pupils in every school should achieve this standard – among other things.

So, unlike in the early GNVQ days, entering pupils for Level 2 applied science to boost A*-C passes would yield no benefit unless pupils also achieved A*-C grades in both English and maths.

However, 2009 was in the days of contextual value added (CVA). This was a value added calculation, like Progress 8 is today, but one which went much further and took into account pupil and school characteristics such as disadvantage.

And the average CVA score for the group who took Level 2 applied science qualifications in 2009 was 1,036.

This would have prompted a reaction similar to a Progress 8 score of +0.5 or higher these days.

Roughly speaking, it meant that pupils achieved, on average, half a GCSE grade (or equivalent) higher per qualification included in their capped “best eight” qualifications than other similar pupils.[2]

So, on the surface, impressive. And perhaps not exclusively due to taking Level 2 applied science.

But did these apparently better results translate into better outcomes later in life?

Subsequent outcomes

As in the GNVQ blogpost, I look at five subsequent outcomes:

- Achievement of NQF Level 3 (two A-Levels or equivalent) by age 19. Source: National Pupil Database post-16 achievement data

- Achievement of two A-Levels by age 19. Source: National Pupil Database post-16 achievement data

- Higher education participation by age 22. Source: Higher Education Statistics Agency pupil record

- Employed for at least 180 days in 2015/16 (age 22). Source: matched HMRC employment (P45) data

- Earnings at age 22. Source: matched HMRC earnings (P14) data

There are some limitations, however. None of the data sources captures emigration or death. The HMRC data excludes those who are self-employed.

Some young people drop out of the various data sources used. I therefore calculate an attrition indicator, measuring the proportion of individuals not observed in any source after age 22.

I also report the percentage for whom we have earnings data in 2015/16. When earnings are reported, we only include those for whom we have earnings data.
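As a rough illustration of the two indicators just described, here is a minimal sketch in Python. The record layout (`sources_seen`, `earnings`) and the toy figures are invented for illustration and are not taken from the matched data, and the use of a mean for earnings is an assumption, since the post does not say which average is reported.

```python
def summarise_outcomes(records):
    """Summarise attrition and earnings coverage for a group of pupils.

    Each record is a dict with two hypothetical fields:
      sources_seen: set of data sources in which the person is observed
      earnings:     annual earnings, or None if no earnings data exists
    """
    n = len(records)

    # Attrition: share of people not observed in any data source.
    attrition = sum(1 for r in records if not r["sources_seen"]) / n

    # Earnings are only summarised for those with earnings data.
    observed = [r["earnings"] for r in records if r["earnings"] is not None]
    pct_with_earnings = len(observed) / n
    # Mean is an assumption of this sketch; a median could be used instead.
    mean_earnings = sum(observed) / len(observed) if observed else None

    return {
        "attrition": attrition,
        "pct_with_earnings": pct_with_earnings,
        "mean_earnings": mean_earnings,
    }


# Toy data, invented purely for illustration.
group = [
    {"sources_seen": {"hmrc"}, "earnings": 12000},
    {"sources_seen": {"hesa"}, "earnings": None},
    {"sources_seen": set(), "earnings": None},
    {"sources_seen": {"hmrc", "hesa"}, "earnings": 8000},
]
summary = summarise_outcomes(group)
print(summary)
```

Reporting the share with earnings data alongside the earnings figure makes it clear how much of each group the earnings comparison actually rests on.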

The results

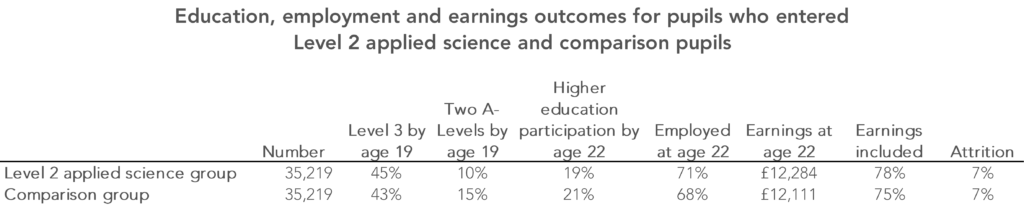

Sure enough, the apparently stronger Key Stage 4 outcomes do not translate into markedly stronger subsequent outcomes. As the accompanying table shows, the group who entered Level 2 applied science tended to have slightly better employment and earnings outcomes but a lower rate of higher education participation.

Replace “Level 2 applied science” with “GNVQ” in the above paragraph and you have the same conclusion as the blogpost about GNVQ.

Interestingly, I find very similar results if I restrict the comparison group to those who entered at least two GCSEs in science, as the table below shows.

So at a macro-level, it doesn’t appear that Key Stage 4 science choices made a huge amount of difference to outcomes in later life for this group of pupils.

That’s on average – it doesn’t necessarily follow that it’s true for every individual.

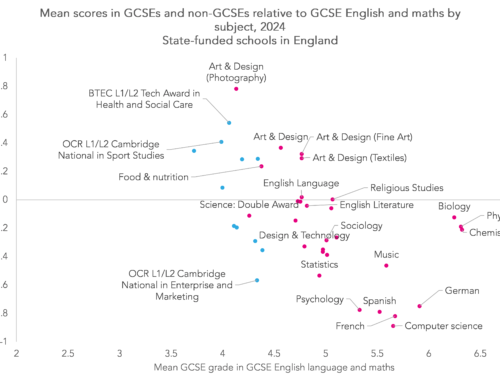

But it does appear that Level 2 applied science qualifications were over-valued in performance tables, lending weight to the argument that we have never got the equivalence right between GCSE and non-GCSE qualifications for the purposes of school accountability.


The support of the Economic and Social Research Council is gratefully acknowledged.

1. Level 1 versions of these qualifications (equivalent to grades D-G at GCSE) were also available but were only taken by a handful of pupils.

Relatively small percentages of pupils also took other types of qualification, such as single science, double award vocational GCSE, and entry level certificates.

In addition, small percentages – running from 6% in 2004 to 3% in 2016 – took no science qualifications. In rare situations, pupils entered more than one qualification type.

2. CVA calculations were complicated slightly by “bonuses” for English and maths results, whether or not those qualifications were among a pupil’s best eight results.

3. Matching is on the basis of pupils’ attainment at Key Stages 2 and 3 and their characteristics (such as free school meals eligibility and whether they have special educational needs).

Pupils are matched exactly on region and free school meals (FSM) status, and then by statistical similarity (propensity score) on the other variables. The matching process balances the two groups almost perfectly on average. A table showing the full effect of carrying out this matching can be found here.

In an ideal world, I’d have determined the two groups based on the science options pupils were taking at the start of Year 10, rather than the end of Year 11. However, this information is not available, so the results will be affected to some degree by dropout. I suspect this effect will be small but I can’t be sure.
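The matching approach described in this note (exact on region and FSM status, then nearest propensity score) could be sketched roughly as follows. This is an illustration only, not the code used for the analysis: the field names are hypothetical, the propensity score `pscore` is assumed to have been estimated already (for example, from a logistic regression on the other variables), and matching with replacement is an assumption.

```python
from collections import defaultdict


def match_pupils(treated, comparison):
    """Pair each treated pupil with the closest comparison pupil.

    Pupils must agree exactly on region and FSM status; within that cell
    the comparison pupil with the nearest propensity score is chosen.
    Matching is with replacement (an assumption of this sketch), so one
    comparison pupil can be matched to several treated pupils.
    Treated pupils with no candidate in their cell are left unmatched.
    """
    # Index comparison pupils by the exact-match variables.
    cells = defaultdict(list)
    for pupil in comparison:
        cells[(pupil["region"], pupil["fsm"])].append(pupil)

    pairs = []
    for pupil in treated:
        candidates = cells.get((pupil["region"], pupil["fsm"]), [])
        if not candidates:
            continue  # no exact match available for this pupil
        nearest = min(
            candidates,
            key=lambda c: abs(c["pscore"] - pupil["pscore"]),
        )
        pairs.append((pupil["id"], nearest["id"]))
    return pairs


# Toy data, invented purely for illustration.
treated = [
    {"id": "T1", "region": "North East", "fsm": True, "pscore": 0.62},
    {"id": "T2", "region": "London", "fsm": False, "pscore": 0.30},
    {"id": "T3", "region": "South West", "fsm": True, "pscore": 0.50},
]
comparison = [
    {"id": "C1", "region": "North East", "fsm": True, "pscore": 0.40},
    {"id": "C2", "region": "North East", "fsm": True, "pscore": 0.60},
    {"id": "C3", "region": "London", "fsm": False, "pscore": 0.35},
    {"id": "C4", "region": "London", "fsm": True, "pscore": 0.30},
]
pairs = match_pupils(treated, comparison)
print(pairs)
```

In this toy example T3 has no comparison pupil in the same region and FSM cell and so drops out, which is the price of insisting on exact agreement for those two variables.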
