Over the past fortnight, secondary schools in England have submitted centre assessment grades for their Year 11 pupils to the exam boards. This has happened in response to GCSEs being cancelled this year.

Coming up with these grades has been a huge undertaking for teachers – one done with minimal guidance and training.

The next step is moderation by exam boards, before grades are issued to pupils in August.

But an exercise carried out by FFT gives an indication of the challenges facing Ofqual and the exam boards.

The data we collected

Between 28 April and 1 June, FFT ran a statistical moderation service which allowed schools to submit preliminary centre assessment grades they were proposing for their pupils. In return they received reports which compared the spread of grades in each subject to historical attainment figures and progress data.

In this blogpost, we’ll take a look at some of the main findings from the service, based on the data of more than 1,900 schools – over half of all state secondaries in England – which had submitted results when the service ended on 1 June.

That’s the date on which the window for secondary schools to submit their proposed grades to the exam boards opened – though it’s worth saying that we don’t know if schools will have submitted the same data to the exam boards as that which we’re analysing here. They may have used the reports they were provided with to amend the mix of grades they were proposing.

Comparing 2020 and 2019 results

Noting that caveat, it is nonetheless worth analysing the data that schools submitted.

We’re going to compare it to published, school-level results for 2019 – only including the results of schools for which we have both 2019 and 2020 data and only looking at subjects for which we have enough data to form reliable conclusions.[1]

So what does this comparison show?

Well, at the top level, this year’s teacher-assessed grades are higher than those awarded in 2019 exams. In every subject we’ve looked at, the average grade proposed for 2020 is higher than the average grade awarded last year. In most subjects, the difference is between 0.3 and 0.6 grades.

Starting with the subjects that almost all pupils sit, the average of all the teacher-assessed grades in English language comes out as 5.1 – that is, a little above a grade 5. That compares to an average grade of 4.7 last year. For English literature, a slightly smaller increase in average grade is seen, from 4.8 last year to 5.0 this year, while in maths the average proposed grade for 2020 is 5.0, compared to 4.7 for 2019.

Looked at another way, were these proposed grades to be confirmed, the share of pupils awarded a grade 4 or above would increase from 71.4% to 80.8% in English language, from 73.7% to 79.0% in English literature, and from 72.5% to 77.6% in maths.

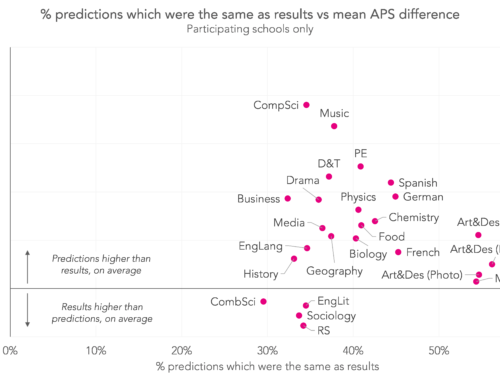

The chart below shows how the proposed results for 2020 compare to 2019’s actual results for all subjects in terms of average grade.

The subject with the biggest difference between average grade awarded in 2019 and proposed for 2020 is computer science, with a difference of nearly a grade: 5.4 for 2020, compared to an average grade of 4.5 for 2019. In 10 of the 24 subjects we’ve looked at, there’s a difference of half a grade or more between the average proposed grade for 2020 and the average grade awarded in 2019.

At the other end of the scale, the smallest differences between average proposed 2020 grades and 2019 grades are around a third of a grade or less: in English literature, combined science, religious studies, maths and art and design (photography).

The next chart shows the 2019 grade distribution for the individual subjects we’ve looked at, compared to the teacher-assessed figures we have for 2020.

Looking across all subjects, if these grades were given out this summer then we would see the share of grade 9s increase from 4.8% of all grades awarded to 6.3%. The share of results receiving a grade 7 or above would increase from 23.4% of all grades to 28.2%, while the share of results receiving a grade 4 or above would increase from 72.8% to 80.7%.
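To make the scale of these shifts concrete, the three pairs of figures quoted above can be expressed as percentage-point and relative changes. The following is a minimal sketch in Python that uses only those published percentages; the function and variable names are illustrative, not part of FFT's analysis.

```python
# Share of all grades awarded in 2019 and proposed for 2020, in percent,
# copied from the paragraph above.
shares = {
    "grade 9": (4.8, 6.3),
    "grade 7 or above": (23.4, 28.2),
    "grade 4 or above": (72.8, 80.7),
}


def change(before, after):
    """Return (percentage-point change, relative change in percent)."""
    point_change = after - before
    relative_change = 100 * point_change / before
    return round(point_change, 1), relative_change


changes = {label: change(*pair) for label, pair in shares.items()}

for label, (points, relative) in changes.items():
    print(f"{label}: +{points} points ({relative:.0f}% relative increase)")
```

The relative change is largest at the top of the scale: a rise of 1.5 percentage points in grade 9s is a much bigger proportional increase than a rise of 7.9 points in grades 4 and above.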

The upshot

What should we make of this?

Well, first of all it’s worth saying again that we don’t know that these will be the results that schools will have submitted to the exam boards.

The precise reason that schools submitted data to FFT’s statistical moderation service was to seek some assistance in determining what grades to set. Many will have used the reports that they received to tweak the grades they were proposing before they are submitted to the exam boards.

That said, around 1,000 schools submitted data to FFT two or more times. On average, there was some change in the grades proposed between these different iterations, but in most subjects the impact was relatively small: a reduction in average grade of 0.1 of a grade.

That suggests that the proposed grades submitted to the exam boards will still have been above those awarded last year.

Consequently, it seems likely that Ofqual and the exam boards will have to apply statistical moderation to the grades submitted by schools, bringing them down on average.

This will be a hugely complex task, the likes of which have never been done before. As well as proposed grades, schools were required to submit rank orderings of their pupils, and it seems likely that these will be used to shift some pupils down from one grade to the next.

Reflecting on the difficult task faced by schools

It’s worth taking a moment to consider the difficulty of the task that schools had, and think about why their proposed grades were higher than those awarded last year.

First, in terms of difficulty, teachers were being asked to form an assessment of the level of attainment that each child had reached – taking into account evidence from a range of sources, but done at a time when schooling has been significantly disrupted.

Lest we forget, 9-1 grades for GCSEs also haven’t been around for that many years yet. In some subjects, teachers only have one year of past results to go on. All other things being equal, you would expect the second cohort of pupils taking a qualification to do a bit better than last year’s, as teachers have an extra year of experience under their belts. An approach called comparable outcomes is normally applied to exam results to account for this, but that won’t have been factored in to the proposed grades that schools have come up with.

It’s also much easier to distinguish between two pupils using marks from an exam. As a thought experiment, imagine two pupils thought to be on the 5/4 boundary who have produced a similar quality of work at school. It would be unfair for the teacher to give one a 5 and the other a 4 – but an exam would rule definitively on the matter, for better or worse.

All things said and done, then, schools have had an incredibly difficult task – albeit one matched in difficulty by that now facing the exam boards and Ofqual.

You can read more analysis of this data in two further posts – one looking at centre variability in results, and another looking at the severity of grading of different subjects.


1. Only schools with more than 25 entries in a given subject in both 2019 and 2020 have been included in this analysis, and we’re only looking at subjects where there were 100 or more such schools. This leaves us with a total of 1,916 schools in the analysis.
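The inclusion rule in this footnote can be sketched in a few lines of Python. This is an illustration only: the input layout (a dictionary keyed by school, subject and year) and the name `select_for_analysis` are assumptions made for the example, not a description of how FFT stores or processes its data.

```python
from collections import defaultdict

MIN_ENTRIES = 25   # a school needs MORE than this many entries in each year
MIN_SCHOOLS = 100  # a subject needs at least this many qualifying schools


def select_for_analysis(entries, min_entries=MIN_ENTRIES, min_schools=MIN_SCHOOLS):
    """Apply the two thresholds described in the footnote.

    entries: hypothetical mapping of (school, subject, year) -> entry count.
    Returns {subject: set of qualifying schools} for subjects that pass.
    """
    qualifying = defaultdict(set)
    for (school, subject, year), count_2019 in entries.items():
        if year != 2019:
            continue
        # A missing 2020 record counts as zero entries.
        count_2020 = entries.get((school, subject, 2020), 0)
        if count_2019 > min_entries and count_2020 > min_entries:
            qualifying[subject].add(school)
    return {
        subject: schools
        for subject, schools in qualifying.items()
        if len(schools) >= min_schools
    }


# Toy data with a lowered subject threshold so the example stays small.
toy_entries = {
    ("A", "maths", 2019): 30, ("A", "maths", 2020): 28,
    ("B", "maths", 2019): 40, ("B", "maths", 2020): 26,
    ("C", "maths", 2019): 25, ("C", "maths", 2020): 50,  # 25 is not "more than 25"
    ("A", "music", 2019): 30, ("A", "music", 2020): 30,  # only one qualifying school
}
selected = select_for_analysis(toy_entries, min_schools=2)
print(selected)
```

In the toy data, maths is kept with schools A and B, while music is dropped because too few schools qualify.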

Very interesting. Thank you for this. You can read my views on the problems faced by Ofqual at https://constantinides.net/2020/04/16/award-of-gcses-and-a-levels-in-2020/ and the comments therein, which I’ve kept updated as the details of the standardisation process slowly appear. We await the full standardisation process with bated breath.

There is an important difference between 2019 and 2020 for computer science. In previous years the programming project that requires 20 hours of teaching time but carries no assessment value had to be delivered in year 11; this year it was able to be delivered to the students while they were in year 10, allowing for year 11 to be completely dedicated to exam preparation. It is inevitable that the students will perform better on any interim measures used to try and benchmark their achievement, such as past papers.

Also, we were a little disappointed that the data only looked at one year previous and not a 3 year trend. If a school had a dip in 2019 after an otherwise improving trend (possibly due to cohort profile differences, staffing disruption) they might expect their results to be back on track. Such schools are also likely to respond to a dip in results by putting measures in place to ensure they get back on track.

Has prior attainment been factored into the analysis? If the 2020 cohort’s KS2 results are higher in a given subject than last year’s then we would expect higher grades on average for this year’s candidates. The comparable outcomes process would account for this and produce grade boundaries to reflect as such in a normal year. If it is the case, then the centre assessed grades may be more accurate than suggested and Ofqual’s standardisation model may not need to do as much work.

Thanks for the comment, Andy. In an individual subject it’s possible that that’s the case. We see the same pattern across all the subjects we looked at, though, to a greater or lesser extent, which is unlikely to be explained by prior attainment.

At a national level, that wouldn’t really apply, although it would undoubtedly affect some individual schools. This year’s Y11 was the last cohort to have sat the old ‘levels’ KS2, and there was little difference between the 2014 and 2015 results at KS2 – reading saw a very slight drop, maths a slight increase and writing a slight increase. Not enough to account for the differences we’re seeing between 2019 and 2020 data here.

You would expect schools to submit ‘inflated’ grades. Every year pupils under-perform in exams for a multitude of reasons, and under-performance is far more likely than over-performance – very few pupils suddenly understand things in the exam. Under-performance, by definition, is below what teachers would have expected, so you can’t predict it. Therefore, with pupils predicted to perform as expected, you would expect to see better results this year than in other years.

With the Autumn exam series allowing those pupils who feel they have been hard done by to have another go and take the best grade forward, we will have a year group whose overall results will be much higher than previous year groups in any case. The boards strictly standardising to prevent grade inflation is a nonsense that will cause much unnecessary heartache.

Ordinarily at my FE college a certain number of students would fail to turn up for one of the three maths GCSE exams. Maybe 5%. This year those students will have been predicted grades based on their ability and the 5% non-attendees have been eliminated entirely from the data. This would mean that 5% of the students will score considerably higher with this year’s system compared to last year’s, as the non-attendees were a broad spectrum of abilities.

Has this been considered?

Thanks for your comment, Ian. The 2019 data we’ve used won’t include these pupils. They will be included in the 2020 data at their centre-assessed grade, but it’s unlikely to have a big effect on the national picture – in 2018, we think something like a third of a percent of pupils in state-funded mainstream education didn’t sit their maths exam, for example.

Why is Music not on this data?

Hi Andrew. Only subjects where 100 or more schools had more than 25 entries are included in the analysis – see the footnote on the blogpost. For music, there were only 53 such schools in our sample.

Good stuff, thank you. Three questions, if I may, please…

1. Will you be publishing stats for A level?

2. For GCSE, and supposing that the boards intervene to place the grade boundaries so that the 2020 distribution matches 2019, is it possible to estimate the % of centre assessed grades that would still be confirmed, the % down-graded, and (if any) the % upgraded?

3. You’re very straight about what you did and how you did it, and of the limitations, and you make quite clear that the only comparison is 2019. Do you have any feel for what, if anything, might be different if the boards were also to take 2018 into account, for those subjects graded 9, 8, 7…?

thank you

Hello Dennis. A few quick responses. 1: No, we’ve not done a similar exercise for A-level. 2: This is tricky. If you were to start at grade 9 and then lower approximately 37% of the 2020 grades we collected you would get something close to the 2019 distribution across all subjects. But we’d expect the Ofqual statistical moderation process to be more complex than this and so might end up raising some grades in some subjects in some schools and lowering others. We’ve not got a feel for how this might work in any real detail so can’t really comment any further. 3: The same would apply to taking account of 2018 results as well.
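The simple top-down adjustment mentioned in this reply can be sketched as follows. This is only an illustration of the idea of working down from the highest grade until the proposed results match a target distribution. It is not Ofqual's standardisation model, the function name `match_distribution` is an assumption, and the toy grades and target shares are invented for the example rather than taken from the data collected.

```python
def match_distribution(proposed, target_shares):
    """Reassign grades from the top down so they follow target_shares.

    proposed: list of proposed grades (integers).
    target_shares: {grade: share of all results}, with shares summing to 1.
    Returns (new_grades, share_lowered), where new_grades is aligned with
    the proposed grades sorted from highest to lowest.
    """
    ranked = sorted(proposed, reverse=True)
    total = len(ranked)
    new_grades = []
    cumulative = 0.0
    for grade in sorted(target_shares, reverse=True):
        cumulative += target_shares[grade]
        cutoff = round(cumulative * total)  # results at this grade or above
        new_grades.extend([grade] * (cutoff - len(new_grades)))
    lowered = sum(new < old for old, new in zip(ranked, new_grades))
    return new_grades, lowered / total


# Invented toy data: ten proposed results and a less generous target.
toy_proposed = [9, 9, 7, 7, 7, 5, 5, 5, 3, 3]
toy_target = {9: 0.1, 7: 0.2, 5: 0.4, 3: 0.3}

toy_new, toy_share_lowered = match_distribution(toy_proposed, toy_target)
print(toy_new)
print(f"{toy_share_lowered:.0%} of results lowered")
```

Because each result that loses its place at one grade pushes others down at the next boundary, the share of results lowered can be much larger than the difference in any single headline figure.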

Hi Dave – thanks for the prompt response, and, yes, that all makes good sense. Your results are most illuminating, so let’s see what happens… Cheers Dennis

Very interesting – thanks so much for this

Will you be splitting the data by gender?

Hi Jon, I don’t think we have any plans to do further analysis of the data for now. It’s something we might be able to look at in August/early September though, as FFT will be running its KS4 Early Results Service again this summer.

Hi FFT – I’ve linked to this in a blog posted on HEPI this morning – https://www.hepi.ac.uk/2020/06/18/have-teachers-been-set-up-to-fail/. I hope that’s OK. Thank you, Dennis

That’s fine – thank you, Dennis.

It’s a shame that you haven’t also included the relative difficulty of subjects here. CS is generally about a grade harder than other subjects.

You can find some discussion of the relative severity of grading in this post, which is part of the same series of posts: https://ffteducationdatalab.org.uk/2020/06/gcse-results-2020-the-relative-severity-of-proposed-grades/.

This is really interesting. Thank you.

As you know, exam boards had committed to bringing German and French results in line with other subjects’ results this year. It will be interesting to see whether and how they will now actually do this. One way of doing it would be to keep the German and French teacher predictions as they are, even if they decide to reduce other subjects’ grades. This would go some way to bringing German and French in line. German grades in 2019 were a whole grade below English Language; French grades were 0.85 of a grade below. Have you any ideas? What do you think they might do? What would be the fairest way?

The GCSE and A level pupils have lived through an extraordinary time. They have lived through considerable uncertainty, and have been out of school now for months – not knowing what the implications are for sixth form or university. Many pupils really up their game from Jan to June studying hard for exams – and their marks usually improve accordingly. It’s the period of time where teachers spend time supporting consolidation of learning and help prepare for what’s next. These pupils have missed out on all this learning, and have had to adapt to isolation, uncertainty, sometimes illness and considerable fear. This experience will possibly help build resilience in some children, but equally we have seen and read the many reports on impacts on mental health. At this unique time we would expect the Ministry of Education to be more understanding than punitive.

Thank you for your interesting report. An important factor which needs to be taken into consideration is that some schools have moved to a three-year GCSE course in an attempt to allow pupils to achieve higher grades, and this cannot then just be moderated downwards to fit with the attainment of previous years.

Intriguing report thank you. When looking at the data for Drama we took into account the variance in grade boundaries which changed significantly from 2018 to 2019 and averaged a C-19 response from there which did include an increase on last year alongside a stronger cohort. Variances in subjectivity where NEA coursework is usually marked by visiting examiners and not having been executed this year may also be factors. It would be interesting to see a presentation of the Music data as it stands with the 53 submissions.

I think it’s also important to remember that the 2020 cohort would have been the 1st cohort to take the reformed examinations as part of a 3 year GCSE cohort, rather than a 2-year cohort. The majority of schools in my LA have switched to 3-year courses so that by 2020 and onwards, all their students will have taken their exams as part of a 3-year course – surely this would cause an increase in performance in the cohort? At least more than that of the rise in performance from 2018-2019 where most were still on 2-year courses? If what I’m saying has any merit then that means 2020 would have seen a sharper than usual increase in the quality of exam scripts due to more preparation and more time to learn the facts (which the reforms have a heavy emphasis on), and if there was an increase in the quality of exam scripts then Ofqual examiners would surely see fit to increase the proportion of students achieving better grades, which would partly explain the increase in grade “generosity” for 2020 that the above data suggests. This is especially true for subjects such as Computing, Business Studies and DT, which aren’t properly taught until a student actually starts their GCSE course, meaning that the extra year that the 2020 cohort would have had would have been extremely beneficial, especially since those subjects are content-rich rather than analysis-rich. I’m only a yr11 student so take what I say with a pinch of salt, but I’d love to hear your thoughts on my theory 🙂

I have been Head of Department and taught GCSE Fine Art at the same centre for 25 years. Our cohort size has been relatively consistent (approximately 40 students per year) throughout, as has our teaching staff and approach to delivery. We have also remained with the same exam board throughout. We have been consistently accurate (within tolerance) in our assessment during all of that period and, on the occasion that a moderator changed our marks, an appeal subsequently reinstated our centre’s assessment decisions.

All of our results have fluctuated significantly from year to year during that period. A* (or 9/8 in new spec) percentages from 2001 for example are as follows: 21 27 13 25 17 43 22 23 38 28 36 52 43 23 28 38 69 45. Our A level performances fluctuate in a similar way. If the subject is delivered properly and students are encouraged to experiment and take genuine risks rather than follow a prescriptive ‘house style’ approach, then significant differences in performance are typical from one year to the next.
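The year-to-year variation in the figures quoted above can be summarised with a short calculation. This is an illustrative sketch only: the list is copied from the comment in the order given, and the variable names are assumptions.

```python
from statistics import mean, stdev

# Percentage of top grades (A*, or 9/8 in the new specification) per year,
# in the order given in the comment above.
top_grade_pct = [21, 27, 13, 25, 17, 43, 22, 23, 38,
                 28, 36, 52, 43, 23, 28, 38, 69, 45]

average = mean(top_grade_pct)
spread = stdev(top_grade_pct)  # sample standard deviation

# Absolute change between each pair of consecutive years.
swings = [abs(later - earlier)
          for earlier, later in zip(top_grade_pct, top_grade_pct[1:])]
largest_swing = max(swings)

print(f"years: {len(top_grade_pct)}")
print(f"range: {min(top_grade_pct)}% to {max(top_grade_pct)}%")
print(f"mean: {average:.1f}%, standard deviation: {spread:.1f} points")
print(f"largest change between consecutive years: {largest_swing} points")
```

With a cohort of around 40 students, each pupil is worth roughly 2.5 percentage points, so swings of this size are what one would expect to see from a small centre.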

It is wrong therefore to statistically impose grades in art through the application of a formula based on a centre’s attainment figures in previous years, particularly when there is a much fairer means to calculate the 2020 art grades that students deserve. By the time of lock-down, most would have completed approximately 75% of the work that they would have been assessed against. This will have included all of their coursework (60%) and half of their prep work for the exam (exam performance only counts as a relatively small fraction (25%) of the overall assessment criteria).

OFQUAL’s refusal to accept this as grounds for appeal against changes to CAGs is blatantly unfair. So too is their insistence on the completion of a new task in the Autumn series.

Here is my concern, if we are to truly believe the projected scores: that even with three months’ less tuition, this particular academic year was destined to achieve better than any previous year in history, had the pandemic not happened!

1) There is the potential for universities to be taking on students with gaps in their knowledge who are simply not prepared or equipped, increasing the stress and workload on undergraduates and lecturers. The proof will be in the degree results for this Super Set: will they fulfil the potential their teachers saw in them?

2) What if employers simply don’t believe this is the single brightest year group and simply rate the same grade in other years as better and employ them?

3) Alternatively, what happens to the 2021 leavers if the grades fall back to historical levels with actual exams? Will they be passed over for 2020 students?

Don’t get me wrong I believe that the hardest working students from the historically worst performing schools will have been very hard done by with the algorithm. However I am equally sure this plan will have consequences. My belief is plans for an Individual reviews programme albeit at a higher cost, rather than a free for all should have been

I tend to agree with you, Robin, and fear that, along with what you point out in bullet 1, we could see a high drop-out rate of year 1 university students and the student debt associated with it, which would be a terrible consequence.

Contrary to media and popular opinion I suspect that the algorithm probably got the right result in the majority of cases and with a pre results appeal process for schools and post results appeal process for pupils, would have provided a significantly better outcome than we are witnessing

I truly hope I’m wrong and the best outcome has been achieved….only time will tell

Thanks for this. Has this data been split by race and gender? Is there raw data anywhere that would allow this to be done?

Hi Tom, thanks for your comment. We didn’t have permission to match the data to pupil characteristics – and I’m afraid that we’ve not made the data available for further analysis. It was collected from schools on the understanding that it could be used for research, but not that it would be transmitted on to other parties.