A version of this blogpost also appears on the Centre for Education Economics website.

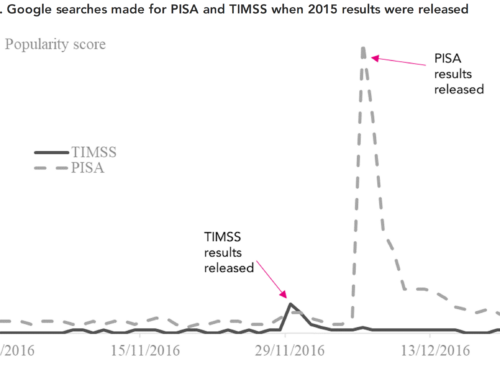

The OECD’s PISA study compares the science, reading and mathematics skills of 15-year-olds across countries, with the results closely watched by journalists, public policymakers and the general public from across the world.

PISA is conducted every three years, and particular attention is now being paid to how the scores of each country are changing over time.

For instance, are the academic skills of young people in some countries improving, while in others they are in relative decline?

Of course, to answer such questions robustly, fair and comparable measures of children’s skills (both across countries and over time) are key.

Yet, a major change was made to PISA in 2015 which has the potential to put such comparability of measures into doubt.

A change in approach

Young people in some, but not all, countries took the PISA assessment on computers for the very first time.

Specifically, PISA 2015 was conducted on computer in 58 nations, with the other 14 continuing to use a standard paper-and-pencil test (the usual practice across all countries in the five PISA cycles between 2000 and 2012).

Therefore, in order for us to retain faith in the comparability of the PISA study, it is vital we establish what impact this major change has had.

Unfortunately, the official documentation on this point is not completely clear, nor is it particularly transparent. If one delves into the depths of the technical appendices [PDF], one finds that the OECD has provided some discussion of these possible ‘mode effects’, along with some wonkish detail on how these have been dealt with.

But what does this actually mean?

It turns out that in the PISA 2015 field test, a randomised controlled trial (RCT) was conducted across multiple countries in order to establish the likely impact of PISA switching from paper to computer assessment. Specifically, a subset of children who took part in the field trial were randomly assigned to complete either a paper or a computer version of the PISA test, with the results across these two groups to be compared.
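To make the logic of such a comparison concrete, here is a minimal sketch in Python of how a mode effect could be estimated from a randomised design: the difference in mean scores between the computer and paper groups, with a simple standard error. It is not the OECD's method, nor the one used in the paper. The function name `mode_effect` is hypothetical, the scores are simulated rather than real PISA data, and the 20-point effect is simply built into the simulation. A real PISA analysis would also need to handle plausible values, survey weights and the clustering of pupils within schools, all of which are ignored here.

```python
import math
import random
import statistics


def mode_effect(paper_scores, computer_scores):
    """Return (difference in means, standard error), computer minus paper.

    Because pupils are randomly assigned to a mode, the two groups should be
    alike on average, so the difference in means estimates the mode effect.
    """
    diff = statistics.mean(computer_scores) - statistics.mean(paper_scores)
    se = math.sqrt(
        statistics.variance(paper_scores) / len(paper_scores)
        + statistics.variance(computer_scores) / len(computer_scores)
    )
    return diff, se


# Simulated data only (an assumption for illustration, not real PISA results).
rng = random.Random(0)
ASSUMED_EFFECT = -20.0  # hypothetical penalty for taking the test on computer

pupils = [rng.gauss(500, 100) for _ in range(2000)]  # underlying skill
rng.shuffle(pupils)  # random assignment to paper or computer
paper = pupils[:1000]
computer = [score + ASSUMED_EFFECT for score in pupils[1000:]]

diff, se = mode_effect(paper, computer)
print(f"Estimated mode effect: {diff:.1f} points (standard error {se:.1f})")
```

The estimate will not match the assumed effect exactly, because the two randomly formed groups differ a little by chance; the standard error gives a rough sense of how much.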

Yet little clear and transparent evidence has been published on these results – what exactly did these RCTs show?

What data from Germany, Sweden and Ireland can teach us

In my new study, forthcoming in the Oxford Review of Education, we provide evidence from the RCTs conducted in three countries – Germany, Sweden and the Republic of Ireland.

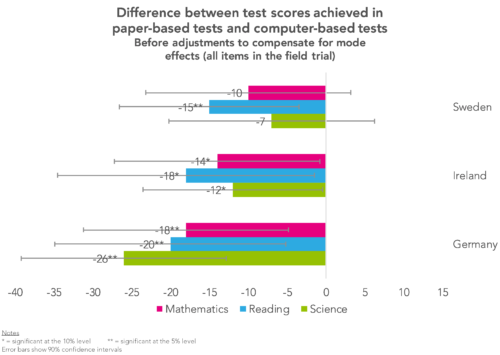

The chart below illustrates the results – highlighting how the mode effect is HUGE.

Children who took the computer version of the PISA test scored much lower than their peers who were randomly assigned to the paper version, with the difference sometimes equivalent to around 20 PISA test points (around six months of additional schooling).

This is clearly an important result, and one that, in my opinion, should have been more transparently published by the OECD.

Fortunately, realising the seriousness of this issue, the PISA survey organisers have attempted to adjust the main PISA 2015 results to compensate for such mode effects.

Yet there has again been relatively little detail published on how well such corrections have worked.

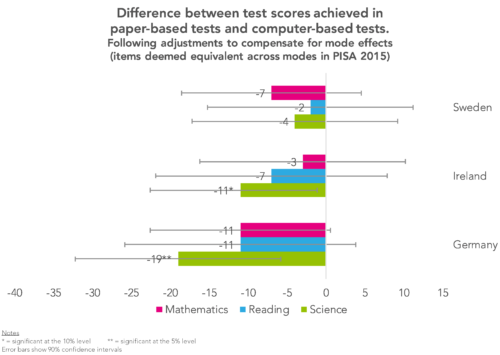

My new paper describes the methodology the OECD has used, and considers how well it has managed to address this comparability problem. The key findings are presented in the chart below.

The good news is that the correction applied by the OECD seems to have helped the situation – after applying the essence of their correction, the mode effect observed shrinks. Yet it has not completely eradicated the problem. A post-correction difference of 10 PISA test points between paper and computer groups remains relatively common.

What are the key take-away messages, then?

First, caution is needed when comparing the computer-based PISA 2015 results to the (paper-based) results from previous cycles. For instance, could this help explain why Scotland apparently fell down the PISA rankings in 2015? There is not enough evidence currently available to know – but I don’t think we can currently rule comparability problems out. Indeed, the OECD itself notes in its international report (page 187) that it is not possible to rule small to moderate mode effects out. (Quite what the OECD means by “small” and “moderate” has, however, not been defined.)

Second, our paper serves as an important case study as to why none of us should blindly believe the OECD when it tells us things are “comparable”. Indeed, in many ways, its failure to publish transparent evidence on this issue has severely undermined any such claims. That is why continuing close academic scrutiny of PISA and other such international studies is warranted. I continue to urge the OECD to publish the PISA 2015 field trial data from all countries, and to put more transparent evidence on this issue of mode effects into the public domain.

Find the paper on which this blogpost is based here.


This is an intriguing analysis but raises further questions. If 58 nations ran the test on a computer, why are only three countries analysed here? And what is a plausible explanation for the mode of assessment affecting science more? Certainly an important area for exploration.